The Reflective Universe Hypothesis

Is the universe a simulation? If so, what does that mean?

The Reflective Universe Hypothesis proposes that if the universe is computational, its very computation entails a lower-order causal manifold whose projection constitutes the universe’s observable output. Discovering or disproving that domain defines the ultimate boundary between science and metaphysics.

This blog post will evolve over time. The first version was completely wrong. Hopefully this version is slightly better, but it's probably still full of mistakes. Please send me a DM or tag me on X with any improvements or corrections and I'll update it.

1. Premise: The Universe as a Machine

Every system that maps inputs to outputs through lawful state transitions is, by definition, a machine. The universe fits this definition perfectly: it evolves deterministically (or probabilistically) according to physical laws that can be expressed as computational rules. The universe, therefore, is a machine running code.
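To make "a machine running code" concrete, here is a minimal sketch of a toy universe: a row of cells (the state) and one fixed rule (the law) applied over and over. It uses Rule 110, a well-known elementary cellular automaton chosen purely for illustration; nothing here claims our universe runs on it. The `noisy_step` variant is a hypothetical way to show the probabilistic case.

```python
import random

def step(cells, rule=110):
    """One lawful state transition: each new cell depends only on its left
    neighbour, itself and its right neighbour (the edges wrap around)."""
    n = len(cells)
    new_cells = []
    for i in range(n):
        left, centre, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (centre << 1) | right  # neighbourhood as a 3-bit number
        new_cells.append((rule >> index) & 1)        # look up the outcome in the rule's bits
    return new_cells

def noisy_step(cells, rng, flip_prob=0.01, rule=110):
    """Probabilistic variant: same rule, but each new cell flips with probability flip_prob."""
    return [int(c ^ (rng.random() < flip_prob)) for c in step(cells, rule)]

def run(initial, steps, transition):
    """Apply a transition function repeatedly and keep every state (the execution trace)."""
    history = [initial]
    for _ in range(steps):
        history.append(transition(history[-1]))
    return history

world = [0] * 31 + [1]
for row in run(world, 15, step):
    print("".join("#" if c else "." for c in row))
```

The same initial state always produces the same trace under `step`; under `noisy_step` the trace depends on the random source as well, which is the "(or probabilistically)" case.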

2. Code, Causality, and Computation

If the universe is a machine, then its laws are the code, and all matter, energy, and life are the result of executing that code. We humans, as emergent products of those computations, are computational machines ourselves. We have also been designing and building our own computational machines, such as CPUs, GPUs, and quantum and thermodynamic computers. These machines can model parts of the universe itself at various levels of detail, simulating gravity, light, and chemistry. They can even generate simulated videos like this:

This video was generated by the Veo 3.1 AI model on Google Flow.

3. Emulators vs. Simulators

A simulator reproduces the behavior of a system according to a model of its rules. A simulator says, “If I apply these equations or constraints, I can predict what the system will do.” It doesn’t need to mimic the internal structure of the system, only the outcomes. That’s how flight simulators work: they replicate how flying feels and responds, not how air molecules actually collide with the wings. It's also how you would describe this AI-generated video of a sparrow.

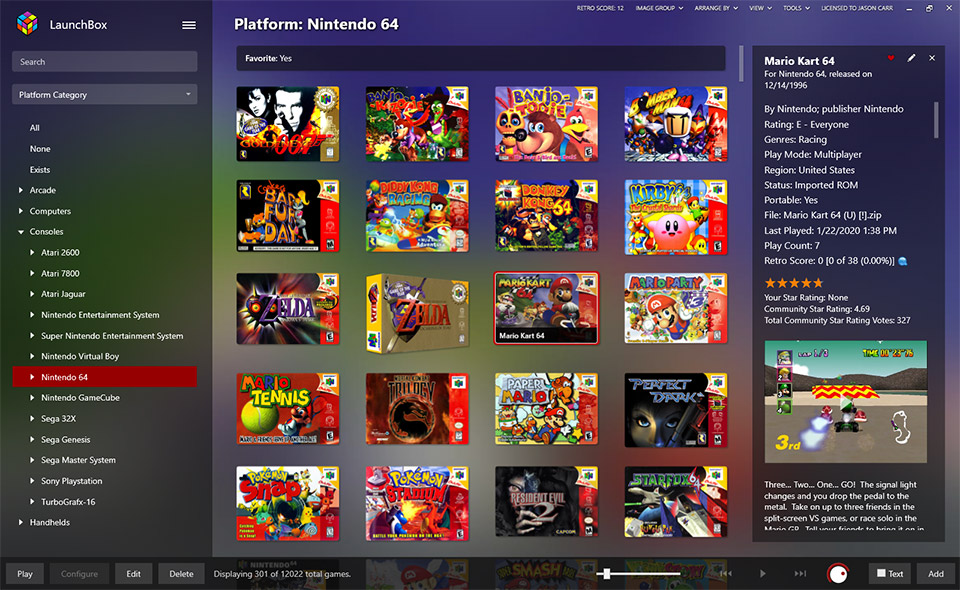

An emulator, by contrast, reproduces the underlying mechanisms closely enough that the same processes can run inside it. A game console emulator doesn’t imitate what a game looks like; it re-creates the console’s circuitry so that the original code executes as if it were on native hardware. It models being, not just behavior.
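The difference can be sketched in a few lines. The instruction set below is hypothetical, invented for this example rather than taken from any real console. The emulator re-creates the machine's mechanism (registers, a program counter, a fetch-decode-execute loop) so the original program actually runs; the simulator only predicts the observable result from a model of the behaviour.

```python
# A hypothetical toy instruction set, invented for this example (not any real console's).
PROGRAM = [
    ("LOAD", "a", 0),    # 0: a = 0
    ("LOAD", "i", 10),   # 1: i = 10
    ("ADDR", "a", "i"),  # 2: a = a + i
    ("DEC", "i"),        # 3: i = i - 1
    ("JNZ", "i", 2),     # 4: if i != 0, jump back to instruction 2
    ("HALT",),           # 5: stop
]

def emulate(program):
    """Emulator: re-creates the machine's mechanism so the original code runs."""
    regs = {}
    pc = 0
    while True:
        op, *args = program[pc]  # fetch and decode the next instruction
        pc += 1
        if op == "LOAD":
            regs[args[0]] = args[1]
        elif op == "ADDR":
            regs[args[0]] += regs[args[1]]
        elif op == "DEC":
            regs[args[0]] -= 1
        elif op == "JNZ":
            if regs[args[0]] != 0:
                pc = args[1]
        elif op == "HALT":
            return regs
        else:
            raise ValueError(f"unknown opcode: {op!r}")

def simulate(n):
    """Simulator: predicts the final value of register a from a model of the
    behaviour (the sum 1 + 2 + ... + n) without executing any instructions."""
    return n * (n + 1) // 2

print(emulate(PROGRAM)["a"], simulate(10))  # 55 55
```

Both agree on the output, but only the emulator could run a *different* program written for the same machine; the simulator knows nothing beyond the one behaviour it models.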

Our machines are capable of both simulating and emulating other machines. A Nintendo 64 emulator is a virtual game console running on your computer: the game runs on the emulated console, which runs on your computer's CPU, which in turn runs on physics. Each layer is a lawful system within a lawful system. This nesting shows that a computational universe can contain subsystems capable of reproducing the causal grammar of other machines, and in principle parts of its own. Each level reflects a subset of the causal space defined by the one below it.

4. The Taxonomy of Simulation

You can think about three fundamentally different targets of simulation:

- Ontological simulation: reproduces the causal substrate itself (laws of being).

- Phenomenological simulation: reproduces experience or appearance (what it’s like).

- Epistemic simulation: reproduces knowledge or understanding (what is known or inferred).

You can extend the framework slightly with a few more specialized categories, though these are often just hybrids:

- Pragmatic simulation: built for function, not fidelity, such as engineering or economic models that imitate just enough of reality to optimize outcomes rather than reproduce truth.

- Aesthetic simulation: built for expression or affect: art, narrative, theater, AI video, etc. These evoke experience without aiming at causal or epistemic truth.

- Ethical or normative simulation: built to test value systems or moral choices (trolley problems, social experiments).

- Reflexive simulation: models the act of modeling itself (meta-simulations, agent-based epistemic games, or philosophical thought experiments).

You can even categorize the same simulation in different ways depending on what you take to be the object of the simulation. How would you categorize the AI "simulation" of a sparrow above?

If you focus on what the AI produces (a visible, audible experience that imitates perception), then it fits the phenomenological category: it simulates what it’s like to see a sparrow and a crowd in motion.

If you focus on what the AI is doing internally (mapping statistical relationships between text and video frames, without any sensory “experience” or physics), then it’s an epistemic simulation: a statistical model of what it knows about how a scene should look.

You can think of it as layered:

- Epistemic core: probability distributions learned from data.

- Phenomenological surface: sensory illusion produced for you.
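The two layers can be illustrated with a toy Markov chain standing in for the video model. This is an assumption made for illustration: real video models are vastly more complex, and the "frames" here are just labelled bird actions. `learn` builds the epistemic core (transition counts from data); `generate` produces the phenomenological surface (a plausible-looking sequence) without modelling any physics.

```python
import random
from collections import Counter, defaultdict

def learn(frames):
    """Epistemic core: count how often each frame is followed by each other frame."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(frames, frames[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start, length, rng):
    """Phenomenological surface: sample a plausible-looking sequence
    from the learned statistics. Nothing here models physics."""
    sequence = [start]
    while len(sequence) < length:
        options = counts.get(sequence[-1])
        if not options:  # no learned successor: stop early
            break
        successors, weights = zip(*options.items())
        sequence.append(rng.choices(successors, weights=weights)[0])
    return sequence

training = ["perch", "hop", "peck", "hop", "perch", "fly", "land",
            "hop", "peck", "perch", "fly", "land", "perch"]
counts = learn(training)
print(generate(counts, "perch", 8, random.Random(7)))
```

Every transition in the output was seen in the training data, so the result looks right, yet nothing in the model knows why a bird lands after flying.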

A "magic trick" is another kind of sensory illusion. Perhaps a magic trick could even be described as a localized, performative simulation of an impossible causal event. This tells us that not all simulations reveal truth. Some are even intentionally designed to deceive, whether it's a magic trick performed in front of an audience or an AI-generated video making the rounds on social media.

When we refer to the possibility of our universe being a simulation, we are talking about an ontological simulation: something with precise causal laws, not just the appearance of what those laws might produce.

5. We Cannot Simulate Our Own Universe

Even with every atom of our cosmos converted into computational machinery, it would be impossible to reproduce our own universe in full depth. Writing down the complete quantum state of even a grain of sand would take more numbers than there are atoms in the observable universe: a general state of just 300 two-state particles already has \( 2^{300} \approx 10^{90} \) amplitudes, while the observable universe contains roughly \( 10^{80} \) atoms. A perfect one-to-one simulation would require more storage and energy than reality itself can ever provide.

This isn’t just a hardware problem. In quantum mechanics, the number of amplitudes needed to describe a system grows exponentially with every additional particle. And because those amplitudes are continuous complex numbers, reproducing them exactly would require unlimited precision. That means the universe can never contain a perfect copy of itself.
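The exponential growth is easy to check with exact integer arithmetic. This sketch assumes each particle is a simple two-state system (a qubit) and uses the commonly quoted order-of-magnitude estimate of \( 10^{80} \) atoms in the observable universe; the function names are illustrative.

```python
ATOMS_IN_OBSERVABLE_UNIVERSE = 10**80  # commonly quoted order-of-magnitude estimate

def amplitudes_needed(n_particles):
    """Complex amplitudes in a general quantum state of n two-state particles."""
    return 2**n_particles

def smallest_system_exceeding(limit):
    """Smallest number of particles whose full state needs more than `limit` numbers."""
    n = 0
    while amplitudes_needed(n) <= limit:
        n += 1
    return n

print(smallest_system_exceeding(ATOMS_IN_OBSERVABLE_UNIVERSE))  # 266
```

Even storing one amplitude per atom, a universe-sized memory runs out at 266 particles, and a grain of sand contains vastly more than that.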

What we can do, however, is simulate simplified universes. We can drop or compress some of our own laws. We can ignore quantum effects, treat particles as continuous fields, or use coarse-grained averages instead of subatomic detail. This process could be called ontological compression: preserving causal consistency while discarding unnecessary depth.
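A minimal sketch of coarse-graining, assuming a toy world of independent random walkers (our choice of example, not one from the post). The fine-grained model tracks 10,000 individual positions; the coarse-grained model keeps a single number, yet still predicts how far the crowd spreads out.

```python
import random

def fine_grained(n_walkers, n_steps, seed=0):
    """Full-depth model: track every walker's position at every step."""
    rng = random.Random(seed)
    positions = [0] * n_walkers
    for _ in range(n_steps):
        positions = [x + rng.choice((-1, 1)) for x in positions]
    return positions

def coarse_grained_variance(n_steps):
    """Compressed model: for unbiased steps of +1 or -1, the spread of the
    whole crowd is summarised by one number, its variance, which equals n_steps."""
    return n_steps

positions = fine_grained(10_000, 100)
mean = sum(positions) / len(positions)
variance = sum((x - mean) ** 2 for x in positions) / len(positions)
print(f"fine-grained variance: {variance:.1f}, "
      f"coarse-grained prediction: {coarse_grained_variance(100)}")
```

The coarse model throws away every individual trajectory but stays causally consistent with the fine one at the level it describes, which is the trade-off the paragraph calls ontological compression.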

Such compressed worlds can still be rich. Within them, simple rules can produce emergent chemistry, weather, or even life-like dynamics. But these are approximations, not self-contained realities. Their laws are shadows of ours.

If you follow this line of reasoning, then our physical laws might be a shadow of some deeper, more complex substrate. If a “lower-order” universe ever simulated us, it may have performed the same act of compression: carefully choosing a subset of its own laws capable of generating a coherent, stable world like ours.

6. The Reflective Universe Hypothesis

This "computational stack" might implicitly define a lower-order causal manifold. In other words, the rules of this universe might encode not only what happens within it, but also the possibility that what happens within it is only a representation of what lies "beneath" it. If anything does exist "beneath", it must be lower-order by definition, in the same way that the substrate of the universe sits one level below the CPU, the CPU one level below the virtual CPU, and the virtual CPU one level below the game program.
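The stack can be sketched as a simple linked structure in which each layer points to the layer it runs on. The `Layer` class and its method are hypothetical names for illustration. In these terms, the hypothesis is the question of whether the bottom layer's `substrate` really is `None`.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Layer:
    name: str
    substrate: Optional["Layer"] = None  # the layer this one runs on (one level "below")

    def beneath(self):
        """List every layer below this one, nearest first."""
        found, layer = [], self.substrate
        while layer is not None:
            found.append(layer.name)
            layer = layer.substrate
        return found

physics = Layer("physical substrate")
cpu = Layer("CPU", physics)
virtual_cpu = Layer("virtual CPU (emulator)", cpu)
game = Layer("game program", virtual_cpu)

print(game.beneath())     # ['virtual CPU (emulator)', 'CPU', 'physical substrate']
print(physics.beneath())  # [] (as far as we can currently tell)
```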

7. Implications for Existence and Observation

If the universe functions as a computationally closed system, then all observable phenomena, including our consciousness and subjective experience, represent the output states of its causal program. Our reality is the realized execution trace of that program. (And of programs that write other nested programs, and so on.)

The formal structure of the laws governing this universe therefore implies, by inference rather than proof, the existence of a meta-causal domain: a lower-order causal layer whose relations generate the structure that our universe enacts. If this is true, then the phenomena we observe might not even be the primary operations of that domain, but only a representation of them.

Our consciousness and subjective experience might even be only a representation of what it is actually like to have a "lower-order subjective experience", if such a thing is possible.

Controversial take: If God is real, then this is how you might describe what it is like to be God. And if God is not real, this is still how you might describe what it would be like to be God.

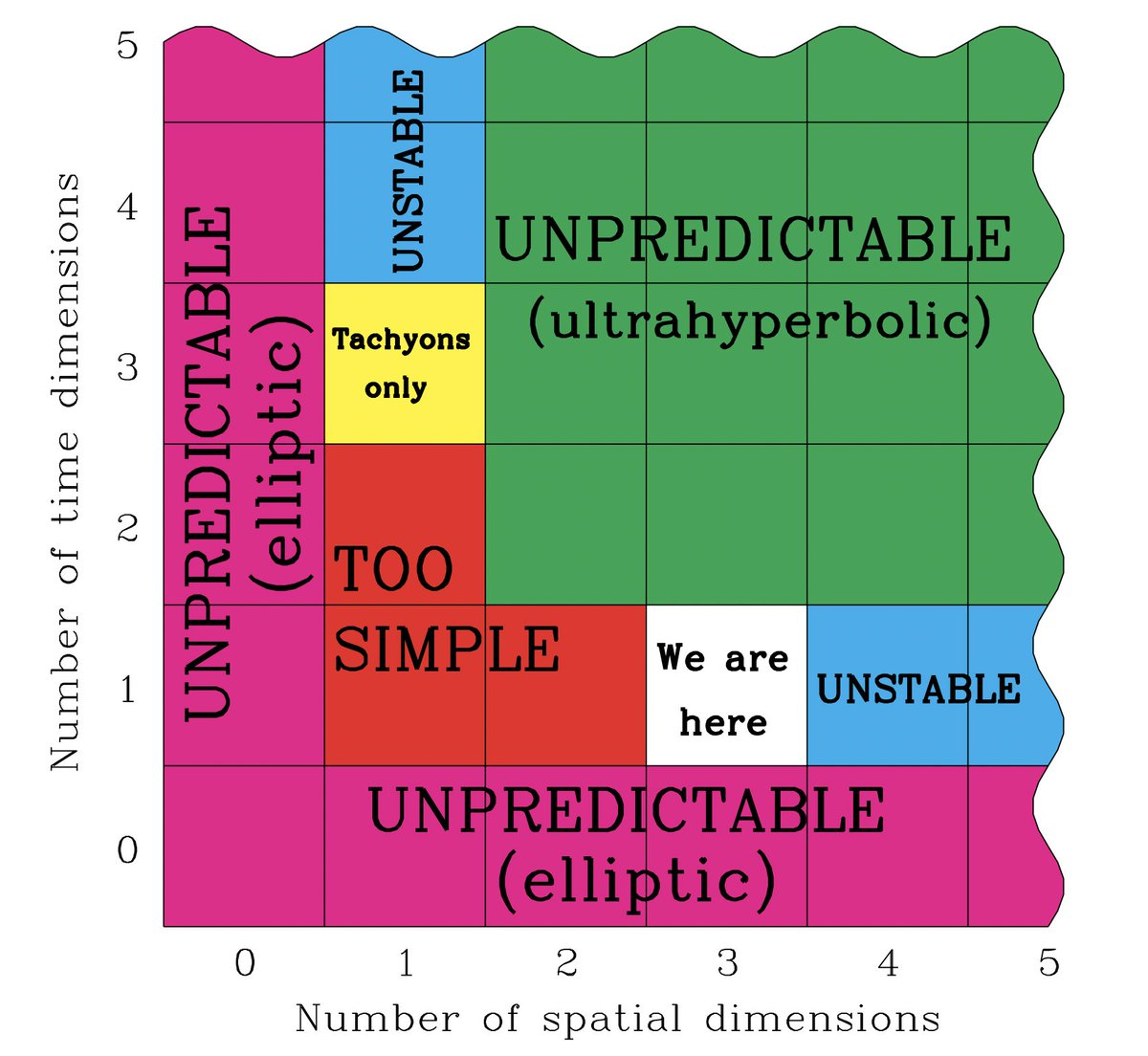

It's also fun to think about higher numbers of spatial dimensions. We simulate our 3D world and view the result through a 2D grid of pixels on a screen. Is it possible for a universe to have 4 spatial dimensions, and for entities within that universe to view the results of their own simulations as 3D voxels?

Probably not:

Another possibility is that our universe could be an emulation and not a simulation. Our physical laws might have been "code" that originally ran in one universe, but were ported to run accurately somewhere else.

Humans like to prevent species from going extinct through our conservation efforts. It's fun to think about a lower-order universe where entities try to prevent universes from "going extinct" by running them on an emulator.

If you follow this line of reasoning even further, then it's unlikely that our universe is maxing out the resource constraints of any lower-order universe.

Any entity that chooses to simulate a universe might have to weigh up a lot of options, just like we do. There could be lower-order versions of "economics" and "energy budgets", where they might have chosen to put those resources towards something else.

And of course, the concept of "time" doesn't exist "outside" or "beneath" our universe. At least, that's what they say. Maybe there's actually some lower-order causal phenomenon that is a distant cousin of what we call time.

If not, then throw the whole theory out because nothing in this blog post makes sense without it.

8. Gödel and the Loophole

Gödel's second incompleteness theorem tells us that any consistent, effectively axiomatized formal system expressive enough to describe basic arithmetic cannot prove its own consistency. But this is a limit on what a closed system can prove from the inside. Once a system gains sensory feedback, such as interaction with its substrate, it can empirically infer the existence of lower-order structure. This is how a program can monitor the CPU that it is running on, or react to user input from a keyboard. It is also how we are able to observe the universe that "runs" us.

Even if AI expands throughout the universe and causes the universe to "wake up" as a universal consciousness, this intelligence would still be within the same ontological order as ours, just on a much grander scale. However, it might someday be able to inspect its own substrate far more deeply than we have ever been able to, and it might possibly detect signals of lower-order causality.
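A minimal sketch of the "program monitoring its substrate" analogy, using only Python's standard library (`platform`, `os`, `time`). The program does not deduce these facts from its own code; it learns them by querying and timing the machinery underneath it, which is the empirical channel described above. The function name `probe_substrate` is hypothetical.

```python
import os
import platform
import time

def probe_substrate():
    """Ask the host about the machinery this program is running on."""
    start = time.perf_counter()
    sum(range(100_000))                     # a small fixed workload
    elapsed = time.perf_counter() - start  # timing depends on the hardware underneath
    return {
        "architecture": platform.machine(),
        "interpreter": platform.python_implementation(),
        "logical_cpus": os.cpu_count(),    # may be None if it cannot be determined
        "workload_seconds": elapsed,
    }

print(probe_substrate())
```

The timing measurement is the more interesting part: it is a side channel that reveals something about the substrate even if the host offered no API to describe itself.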

9. The Test of Causal Closure

If the universe is fully self-contained, every phenomenon must ultimately be explainable by its internal laws. If there is structured information that violates those laws, that would be evidence of some external input: a lower-order signal.
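A minimal sketch of what checking causal closure could look like, assuming a toy one-number universe whose entire law \( f \) is the logistic map (an arbitrary choice for illustration). `tolerance` stands in for measurement error; `law`, `evolve` and `find_violations` are hypothetical names.

```python
def law(x):
    """Hypothetical internal law f: the logistic map with r = 3.7."""
    return 3.7 * x * (1 - x)

def evolve(x0, steps, f, inputs=None):
    """Run the law forward; `inputs` optionally injects outside nudges {step: amount}."""
    inputs = inputs or {}
    xs = [x0]
    for t in range(steps):
        xs.append(f(xs[-1]) + inputs.get(t, 0.0))
    return xs

def find_violations(trajectory, f, tolerance=1e-9):
    """Return (t, residual) for every step where the observed next state
    differs from what f predicts by more than `tolerance`."""
    violations = []
    for t, (now, nxt) in enumerate(zip(trajectory, trajectory[1:])):
        residual = nxt - f(now)
        if abs(residual) > tolerance:
            violations.append((t, residual))
    return violations

closed = evolve(0.2, 50, law)                     # nothing but the law
nudged = evolve(0.2, 50, law, inputs={30: 0.01})  # one outside input at step 30
print(find_violations(closed, law))  # []
print(find_violations(nudged, law))
```

The closed trajectory produces no residuals at all, while the nudged one shows exactly one violation at step 30, even though every later step follows the law perfectly again.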

10. A Future Experiment

An advanced civilization or AI might choose to approach this scientifically:

- Map and verify all physical laws to the highest achievable precision.

- Monitor the entire universe for any signal, pattern, or event that violates those laws.

- If no violations occur: the universe is likely to be causally closed.

- If a structured, law-breaking signal is detected: the universe might be receiving lower-order input. We might have found empirical evidence of an agent that operates "beneath" us. (Or we might have just discovered a new physical law.)

This would be the ultimate experiment. If SETI is the search for a signal from beyond, then this would be a multi-trillion-year search for the signal from "below". It is the final question that any intelligent system might ask once it has answered all others.
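The list above separates a *structured* law-breaking signal from mere noise. One rough way to tell the two apart is compressibility: patternless data barely compresses, while structured data compresses well. This sketch uses `zlib` from Python's standard library as a computable stand-in for "structure"; true algorithmic complexity is uncomputable, so this is only a proxy, and both example anomalies are made up.

```python
import random
import zlib

def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size: close to (or above) 1.0 for
    patternless data, much lower for data with repeating structure."""
    return len(zlib.compress(data, 9)) / len(data)

rng = random.Random(0)
noise = bytes(rng.getrandbits(8) for _ in range(4096))  # unstructured anomaly
signal = b"lower-order?" * 342                           # structured, repeating anomaly

print(f"noise:  {compression_ratio(noise):.3f}")
print(f"signal: {compression_ratio(signal):.3f}")
```

A highly compressible anomaly would still be ambiguous: as the list notes, it might be a lower-order signal or simply a new physical law.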

Formal Summary of Core Ideas

1. Definitions

- Computational Universe \( U \): A causally closed system governed by deterministic or probabilistic transition rules \( f \).

- Lower-Order Causal Manifold \( H \): The complete set of all possible state transitions consistent with \( f \).

- Causal Closure: A property of \( U \) where all internal phenomena are fully explained by \( f \) and prior states.
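One possible way to write these definitions down, as a sketch that assumes discrete time steps \( t \) and a state space \( S \) with states \( s_t \in S \) (neither appears in the definitions above):

\[
s_{t+1} = f(s_t) \quad \text{(deterministic)} \qquad \text{or} \qquad s_{t+1} \sim P_f(\,\cdot \mid s_t) \quad \text{(probabilistic)}
\]

\[
H = \{ (s, s') \in S \times S : P_f(s' \mid s) > 0 \}
\]

\[
U \text{ causally closed} \implies (s_t, s_{t+1}) \in H \quad \text{for all } t
\]

In the deterministic case \( P_f(s' \mid s) \) is 1 when \( s' = f(s) \) and 0 otherwise, so \( H \) reduces to the graph of \( f \). A single observed transition outside \( H \) would be exactly the kind of deviation the Causal Closure Experiment looks for.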

2. The Reflective Universe Hypothesis (RUH)

Any computationally closed universe \( U \), by virtue of its lawful structure, implicitly defines a lower-order causal manifold \( H \).

If any external reality exists, it must occupy \( H \) and be lower-order relative to \( U \).

Evidence for such a domain might manifest as structured information unexplainable by \( f \).

3. Gödelian Boundary and Empirical Loophole

- Consistent formal systems expressive enough to describe basic arithmetic cannot prove their own consistency (Gödel's second incompleteness theorem).

- Physical systems with sensory feedback can infer lower-order realities through empirical correlation (observation as a meta-channel).

4. The Causal Closure Experiment

- Objective: Determine if the universe is fully self-contained or receives exogenous input.

- Method:

  - Derive complete physical laws \( f \).

  - Collect data across all measurable scales for deviations from \( f \).

  - Identify structured anomalies inconsistent with \( f \).

- Interpretation:

  - No deviation → universe is probably causally closed.

  - Structured deviation → might indicate the existence of external input (lower-order causality).

5. Implications

- A positive result could imply that our universe participates in a hierarchy of computation: a machine within a greater machine.

- A negative result might support the hypothesis of total causal closure: the universe as a complete self-executing program with no inputs.

6. Status of the Hypothesis

The RUH remains firmly non-empirical for now. It is simply an attempt to explore whether our universe’s causal structure is fully self-contained, and whether it might reflect a lower-order meta-causal domain.